Intro

As I’ve mentioned previously, we’re using Philips Hue and Home Assistant for most of our lighting. The Philips Hue bridge is connected to Home Assistant, and I’m using the HomeKit Bridge integration to add the lights to Apple’s Home app – the bridge is not connected directly to HomeKit. This has been incredibly stable, but it comes with a downside: we’re unable to use Apple’s adaptive lighting functionality.

Home Assistant has its own integration for Adaptive Lighting, though. The documentation can be confusing, and it took me over six months to get it working the way I wanted. Hopefully this post saves someone else some time!

Setting Up Adaptive Lighting

First off, you’ll want to install HACS, then use it to install the Adaptive Lighting integration. HACS is like a third-party App Store: a lot of integrations are only available through it.

Then, in Home Assistant, go to Settings > Devices & services > Add integration. Search for Adaptive Lighting, then click to add it. Create your first entry. I recommend starting with one: I called mine Adaptive Lighting.

When presented with your options, here’s what to change:

- In the Lights section, add every light you want to be adaptive. For us, that includes almost every light in the house, except for the kitchen cabinet lighting and the outdoor lights (which we want to remain at specific colors).

- For Initial Transition, I’d recommend setting that to 0 seconds. This controls how quickly the lights adapt after turning them on.

- After some trial and error, I set Minimum Brightness to 25% and Maximum Brightness to 75%. We found the default brightness to be too dim, especially in the early evening.

- I also set Minimum Color Temp to 2500 K and Maximum Color Temp to 5500 K. We found the default color temperature to be too orange.

- Since we have color lights almost everywhere in the house, I enabled Prefer RGB Color. This doesn’t seem to have any effect on lights that only support white. For color bulbs, however, it looks less harsh to us.

- Skipping almost all the way down, I enabled Detect Non-HA Changes.

Click Submit to save your changes. You should notice all of your lights change color and brightness almost immediately. Nice, right?
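If you prefer YAML to the UI, the settings above map roughly onto the sketch below. The option names are the ones the integration's README lists for `configuration.yaml`, so check them against the version you have installed. The entity IDs are placeholders, not the lights from this post.

```yaml
# configuration.yaml: a sketch of the UI options described above.
adaptive_lighting:
  - name: "Adaptive Lighting"
    lights:
      - light.living_room        # placeholder entity IDs; list your own lights
      - light.bedroom
    initial_transition: 0        # seconds; adapt immediately after turn-on
    min_brightness: 25           # percent
    max_brightness: 75           # percent
    min_color_temp: 2500         # Kelvin
    max_color_temp: 5500         # Kelvin
    prefer_rgb_color: true
    detect_non_ha_changes: true
```

Using the UI or YAML should give the same result; pick one so the entry isn't defined twice.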

Adapting Everything

Unfortunately, it’s going to take some work to make this stick. In our case, I had to think about all of the ways we turn on our lights and make them compatible with adaptive lighting:

- We often use Apple’s Home app (or Siri) to turn on lights.

- We have scenes that turn on/off lights. Some of them are automated to happen at specific times (such as turning on the lights at sundown), and others happen on-demand (such as when we go to bed).

- We have a motion sensor at the top of the stairs that turns on the lights downstairs at night.

- We also have dimmer remotes in every room.

We wanted the flexibility of having adaptive lighting almost all of the time, but the ability to override that (say, making the lights brighter when cooking in the kitchen). Throughout the day, we wanted the lights to continue adapting – becoming bright white in the morning and afternoon, then dim orange in the evening. After a lot of reading, I was able to make that happen.

HomeKit

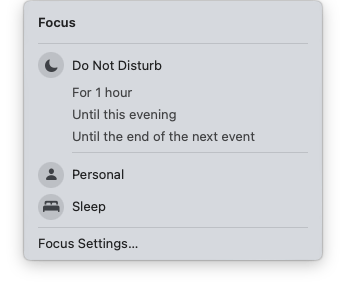

First, if you’re using the above settings for the Adaptive Lighting integration, HomeKit should work as expected. If you turn on a light, it’ll adapt immediately. If you change a light’s color or brightness, the change will stick.

Scenes

If you’re using scenes, the easiest way to handle those is to recreate them in Home Assistant. Same with any automations that run those scenes on a schedule. Don’t do any of that directly in HomeKit.

For your automations that trigger scenes, there are a couple of tricks to enable adaptive lighting. Here’s an example that uses our “Good Evening” scene, which happens an hour before sundown and turns on most of the lights in the house. In Home Assistant, go to Settings > Automations & scenes, then click the Create automation button.

When:

And if: (leave empty)

Then do:

What did we just do here? We told Home Assistant to activate the scene Good Evening an hour before sunset. Because scenes have specific colors for the lights they turn on, and we don’t necessarily want those, we’re disabling manual control of those lights so Adaptive Lighting sets the color and brightness for us. If a light is already off, it stays off, but if it’s set to be adaptive, and it’s on, it’ll adjust automatically.
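As a rough YAML sketch of an automation like this: the scene, switch, and light entity IDs below are assumptions for illustration (the switch name follows the integration's usual `switch.adaptive_lighting_<entry name>` pattern), so substitute your own. Newer Home Assistant releases also accept `action:` in place of `service:`.

```yaml
alias: Good Evening
trigger:
  - platform: sun
    event: sunset
    offset: "-01:00:00"            # one hour before sunset
condition: []
action:
  # Activate the scene (assumed entity ID).
  - service: scene.turn_on
    target:
      entity_id: scene.good_evening
  # Hand the lights back to Adaptive Lighting by clearing manual control.
  - service: adaptive_lighting.set_manual_control
    data:
      entity_id: switch.adaptive_lighting_adaptive_lighting   # assumed switch name
      manual_control: false
      lights:
        - light.living_room        # placeholders: the lights the scene turns on
        - light.hallway
mode: single
```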

It’s important to note that if a light is already on and adaptive, you’ll briefly see the scene kick in (and the light change color/brightness), then adaptive will take over again and revert the color/brightness. It’s a brief disruption that lasts under 5 seconds. I haven’t yet found a way around this, but I actually like the reminder that sundown is in an hour – it’s a good opportunity to go outside before it’s dark.

Motion Sensors

We have a motion sensor at the top of the stairs. When winding down in the evening, we’ll sometimes turn off the lights downstairs, then find we actually need to go downstairs for some reason. I have a scene and an automation I call Midnight Snack that turns on the lights in the kitchen and a couple other rooms, but only if the sensor detects motion at night. Here’s what that looks like.

When:

And if:

Then do:

Note that we’re doing the same thing as the previous automation – we’re activating a scene or turning on some lights, then immediately telling the Adaptive Lighting integration that these lights aren’t being manually controlled (we’re not interested in setting their color/brightness manually). This clears the way for them to become adaptive. When we go downstairs at night, everything will be orange and dim, which is ideal for keeping us sleepy. 😴
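A sketch of what this could look like in YAML, under a few assumptions: the motion sensor and scene entity IDs are hypothetical, and "at night" is approximated here by the sun being below the horizon, which may not match the exact condition you want.

```yaml
alias: Midnight Snack
trigger:
  - platform: state
    entity_id: binary_sensor.stairs_motion   # hypothetical motion sensor
    to: "on"
condition:
  # Only run when the sun is down (one possible definition of "night").
  - condition: state
    entity_id: sun.sun
    state: below_horizon
action:
  - service: scene.turn_on
    target:
      entity_id: scene.midnight_snack        # assumed scene entity ID
  # Clear manual control so Adaptive Lighting sets color and brightness.
  - service: adaptive_lighting.set_manual_control
    data:
      entity_id: switch.adaptive_lighting_adaptive_lighting   # assumed switch name
      manual_control: false
      lights:
        - light.kitchen                      # placeholders
        - light.dining_room
mode: single
```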

Dimmer Remotes

This was the toughest one to figure out – I found a few posts in the Home Assistant community about these, but for the most part had to work it out for myself. Here’s the trick: if the lights are turned on in a way that specifies color or brightness, adaptive lighting won’t work. You’ll need to tell the lights to turn on without including any additional instructions. Unfortunately, I couldn’t find a way to do that in the Hue app, so the solution was to hand over control of the remote buttons to Home Assistant.

First, you’ll want to find your remote in the Hue app and tell it that you’re using something else to manage the buttons:

Tap the Configure in another app button at the bottom, then, in the pop-up menu, tap Configure in another app again.

From there, you’ll need to create automations for each button on your remote. The remote has four buttons – here’s how we use them:

- Toggle the lights on/off

- Increase brightness

- Decrease brightness

- Turn the lights off

In Home Assistant, go to Settings > Automations & scenes, then click the Create automation button. You’ll need to do this four times, once for each button. I recommend naming them Room Name – Button 1, Room Name – Button 2, etc.

Button 1

When:

And if: (leave empty)

Then do:

Button 2

When:

And if: (leave empty)

Then do:

Button 3

When:

And if: (leave empty)

Then do:

Button 4

When:

And if: (leave empty)

Then do:

That should do it. From this point on, when you press the top button to turn on the lights in a room, they should adapt immediately. If you press the button again, it’ll turn off the lights. Pressing the middle two buttons adjusts the brightness by 20% in either direction. Pressing the bottom button turns off the lights.
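For reference, here is a hedged sketch of the "Then do" part of each of the four automations. Only the actions are shown; the trigger is whichever button press you select for your remote in the UI, so it is left as a comment. The light entity IDs are placeholders.

```yaml
# Actions only; add the matching button trigger for your remote in the UI.
- alias: Room Name - Button 1
  action:
    # Toggle with no brightness or color, so Adaptive Lighting can take over.
    - service: light.toggle
      target:
        entity_id:
          - light.room_ceiling     # placeholder
          - light.room_lamp        # placeholder

- alias: Room Name - Button 2
  action:
    # Brighten by 20%.
    - service: light.turn_on
      target:
        entity_id: [light.room_ceiling, light.room_lamp]
      data:
        brightness_step_pct: 20

- alias: Room Name - Button 3
  action:
    # Dim by 20%.
    - service: light.turn_on
      target:
        entity_id: [light.room_ceiling, light.room_lamp]
      data:
        brightness_step_pct: -20

- alias: Room Name - Button 4
  action:
    - service: light.turn_off
      target:
        entity_id: [light.room_ceiling, light.room_lamp]
```

The key detail is Button 1: because the toggle carries no brightness or color data, nothing marks the lights as manually controlled when they come on.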

For some rooms, instead of targeting specific lights, I target all of the lights in the room. That works just fine. In this example, I wanted to exclude my Nanoleaf LED panels from the remote (since those are automated separately), so I only included the Hue lights.

Note: technically, you only need the first button to be controlled by Home Assistant – the two middle buttons will set manual control anyway (since you’re deviating from adaptive lighting), and the bottom button will turn the lights off. You could have Hue handle those buttons instead. However, we’ve seen issues where pressing the top button briefly turns on the lights, then they immediately turn off again. This led me to move all of the buttons to Home Assistant, for consistency.

Resetting Adaptive Lighting

Another trick is to include an easy way to set all of the lights back to adaptive. For example, if you turned up the brightness in a room temporarily, and wanted to reset things, this would give you a central place to do that. In Home Assistant, click Settings > Automations & scenes, click the Scripts tab at the top, and click the Create script button at the bottom. You only need one action:
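One way to express such an action in YAML, as a sketch: the switch entity ID is an assumption based on the entry name used earlier, and omitting `lights` is meant to apply the reset to every light in that entry, which is how the integration documents the service.

```yaml
alias: Reset Adaptive Lighting
sequence:
  - service: adaptive_lighting.set_manual_control
    data:
      entity_id: switch.adaptive_lighting_adaptive_lighting   # assumed switch name
      manual_control: false
      # No `lights:` key, so all lights in this Adaptive Lighting entry are reset.
mode: single
```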

Along with all of our scenes, I also have this script in Apple Home via the HomeKit Bridge integration. I made a room called All Scenes (which sorts first, alphabetically) and dropped all of our scenes and this script in there. They show up as switches that you can turn ‘on’ – they turn ‘off’ after a few seconds and can be selected again. They’re all available for Siri, too – you could say “Hey Siri, Good Evening” and she’ll toggle the switch, which activates the scene in Home Assistant.

Outro

As I mentioned, this took me months to figure out. We’re very happy with this setup, though, and the end result gives us far more flexibility than using HomeKit alone. I hope this post helps someone else get their lights under control, too!